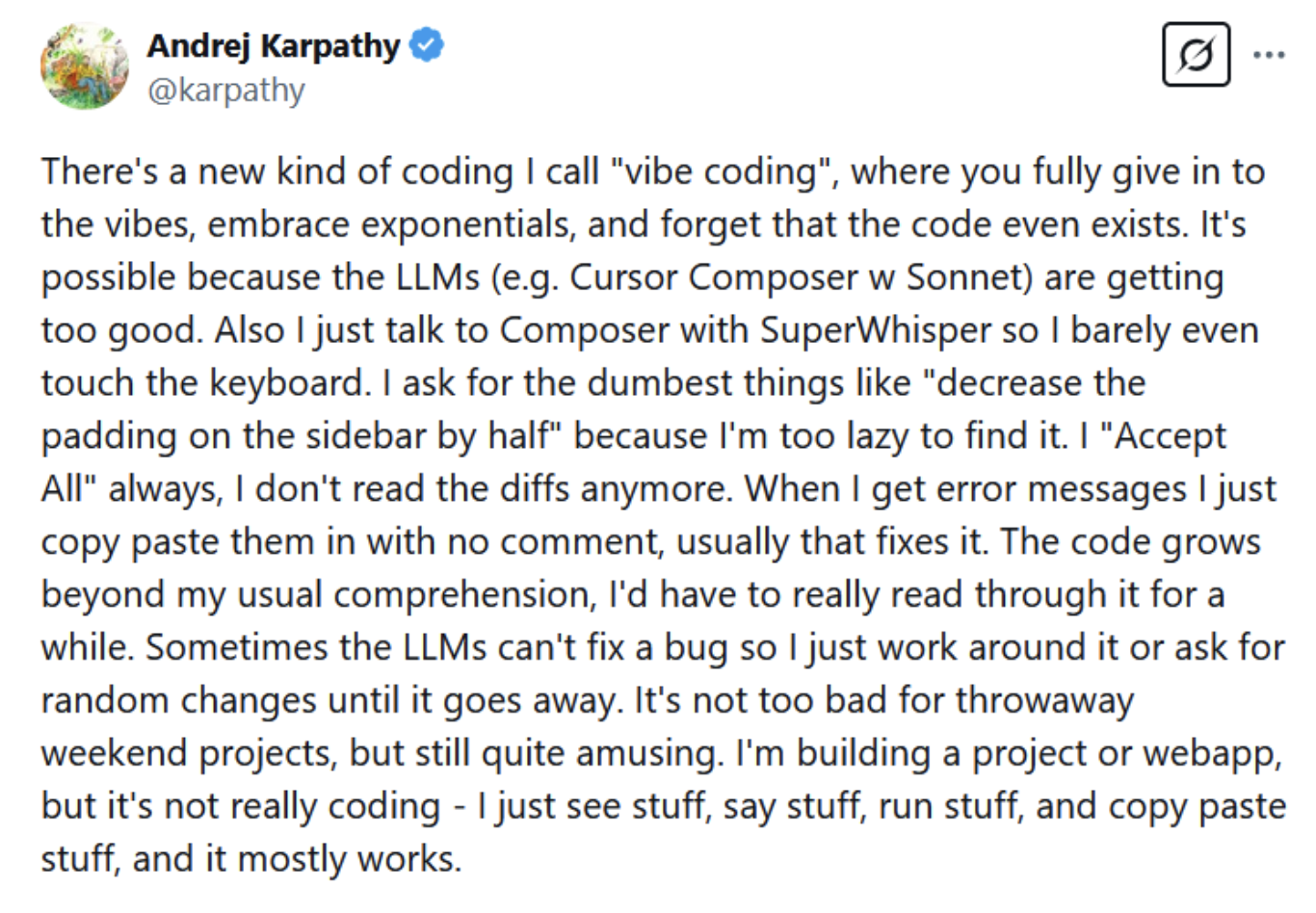

LLM-based programming is tempting for science because people mostly want to spend their time thinking about the problem they’re interested in, rather than writing code. This isn’t helped by the narrative that LLMs have become so good at programming that you don’t even need to think about code any more. Here’s a post from Andrej Karpathy, one of the big names in AI, that I’ve thought about almost every day since I saw it:

Based on my experience with LLM coding, I don’t believe this would really work for science, but it could easily look like it’ll work. I’m convinced this will lead to a new reproducibility crisis, but we could adapt to try and avoid it while recognizing the fact that scientists will still use LLMs no matter how much we warn them about it.

LLM-based scientific computing is something I see people do all the time, and I’ve been pretty against it because you need to ensure the physical correctness of whatever you’re writing. But honestly, at a certain point, your analysis is sufficiently complicated that you can’t trace through every edge case in detail just by understanding what you’re doing line-by-line. I wonder if we’ll see a paradigm kind of like test-driven development for scientific computing in the vibes-based programming era.

The main reason I believe vibes-based programming in the way that Karpathy describes it wouldn’t work for science is the human-in-the-loop validation aspect. In the kinds of quick-hack projects he’s describing, he can immediately see if the padding on the sidebar did decrease by half when he asked for that to happen. And from there, he can either move on to the next thing he wants, or keep asking the LLM to make changes till it passes the immediate visual inspection. Science doesn’t obviously lend itself to those checks, so vibes-based science applied naively would lead to a mess of unphysical/obviously failing results.

However, I think it would be a mistake to say that this is because of the inherent complexity of science. Computational science is complicated, but not more so than the domains in which LLM programming purports to be so good that you don’t need to think about code at all. The key difference isn’t that we have a harder task, it’s that we have a different domain of knowledge that needs to be brought into the loop – the LLM can’t provide that (even if it says it can, or it can approximate having it sometimes). The reason why vibes-based programming is hard to apply to science done well is the same reason test-driven development hasn’t taken off in most computational science applications; the tests that make the most sense for science are often themselves vibes-based!

As scientists, we’re applying our knowledge of our particular scientific domain to the results that we get out of our code. It’s a hard (but often useful) exercise to put that knowledge into the form of concrete tests, but that isn’t done often. We could complain that software practices should be better, as I’ve been known to do a lot, but we recognize this isn’t practical. Basically none of my scientific code is test-driven, because research is hacky and nonlinear. Even though I try to maintain reusable and reproducible code structure because it helps my thought process, in the end I’m checking my results for an amorphous sense of “reasonableness” a lot more often than I’m writing actual tests.

Most of what we’d implement as tests aren’t concrete numerical things, and it’s rarely seen by researchers as being worth the effort to do proper test-driven development. In most cases, you aren’t just checking a result (as in a specific number) is what you expect. You’re checking if a plot looks right, if it obeys a general scaling law, if a curve crosses some physical limit either set by a physical law or just based on your experiential sense of where it should or shouldn’t be. We usually validate code by inspecting the results and going “that’s right” or “that’s wrong”, instead of putting it in a concrete test framework.

As a result, vibes-based science would probably have researchers go into a loop of querying the AI, generating a plot, finding a way in which the plot is wrong, querying the AI again, and/or doing regular debugging. This is pretty inefficient and error-prone if you’re after a final result that just works immediately, because the LLM isn’t going to be tethered to physical reality. It’d be very easy to get a plot that looks right for the wrong reason if that’s the only thing you’re optimizing for, and that would fail on you downstream or lead you to draw the wrong scientific conclusion. And if you don’t have more ways of checking your results, you might never know it happened!

The onus is going to be on the scientist a lot more to come up with better intermediate checks of their results, which is not currently something I see students do a lot, and I would be interested in seeing to what extent active researchers do it. My impression is it’s kind of a subconscious process, and vibes-based scientific programming may require us to make this more explicit.

I don’t even see this as necessarily a bad shift. I think it would be very boomer-programmer of me to go “you should write every bit of logic in exhaustive detail yourself” if my only reasoning for that opinion were “that’s how I had to do it”. I would just be like the people who are still holding on to Fortran because that’s what efficient science looked like in the 70s (cheap shot). The reality is scientists want to spend most of their time doing science and not programming yak shaves. If it works, that’s a good thing. But we also need very strong guardrails to ensure we’re not doing #scientific-fraud ✨

I would be very surprised if we didn’t see at least a few paper retractions as a result of vibes-based programming in a science context, because we just jump into this without thinking through whether a machine that generates plausible-sounding potential lies is a good scientist (it’s not). But the genie may be out of the bottle on this one – it’s hard to convince students not to use something when it seems to them it dramatically cuts down their time on task. Telling them about how LLMs work and their potential limitations can help, but science is still mostly done by people who learned to program in the pre-LLM era. In the next few years we’ll have graduate students running their own computational projects having only learned AI-assisted programming – how much do we trust that they’ll all always ignore the quick, easy, and possibly wrong response?

This doesn’t mean if you learned programming with an AI assistant you’ll inevitably end up with a wrong result – it means we’ll all need to be more conscientious about how we do validation. This isn’t even really a new requirement! The guardrails that you would want to put on AI-assisted computational science are the same guardrails you would want to put on code that you wrote yourself, or that someone else wrote. A lot of theoretical work is based on packages that other people wrote, and you can do full research projects without ever having to look at the source code for those projects. You just implicitly trust their correctness because someone else has done the validation, and you spend your time working with the outputs of these packages and running analyses that are informed by your understanding of the physics. If scientists want to spend most of their time thinking about how they’ll check a result, the computational tools and training they receive should support that!

So would I prefer it if scientists eschewed LLMs entirely? I think that’s an equivalent question to a 2012 astronomer asking if the field should avoid Python in favor of IDL. I may have a personal preference, and I doubt I’ll be doing any vibe science any time soon (Copilot in VSCode was immediately so annoying with so many wrong suggestions that I turned it off within a day) but it’s going to happen without any consideration of what I’d prefer. However, I think this is a good opportunity to make sure we’re critical about the processes and results in computational science: if you are going to use an LLM, and you believe its results, you should be able to articulate exactly why in the same way you’d be able to if you wrote the code yourself.